If you were planning a trip to a far-flung spot, you’d likely map the route, figuring out how to get from Starting Point A to Destination Point D and identifying what combination of planes, trains, and automobiles would take you through Points B and C.

Well, maybe for vacation travelers. But not always, it seems, for voyagers of a different sort: organizations that set out to solve a thorny civic or social problem in the hope of producing good outcomes for those affected. Too often, these would-be problem-solvers fail to clearly define the issue that concerns them (their departure Point A, you might say) and to plot out the path that will take them to intermediate progress (Points B and C) and, finally, to a solution and the benefits it brings (Point D).

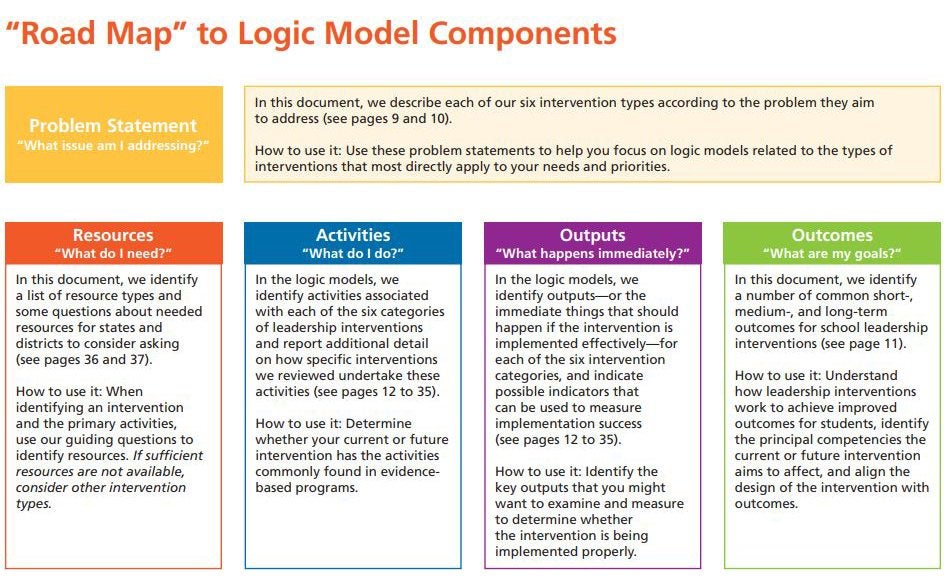

That’s where a “logic model” comes in. No, the term refers neither to a brainiac runway star nor to a paragon of rationality. Rather, a logic model is a kind of map, says Ed Pauly, Wallace’s former research director, one that shows “why, logically, you’d expect to get the result you are aiming for.”

Logic models are on the minds of people at Wallace these days because of a RAND Corp. guide we published recently to assist states and school districts planning for funding under the Every Student Succeeds Act (ESSA), a major source of federal support for public education. The guide focuses on initiatives to expand the supply of effective principals, especially for high-needs schools. It describes how logic models can show the guardians of ESSA dollars that six types of school leadership “interventions” have a solid rationale, even though they are not yet backed by rigorous research.

Take, for example, one of the six interventions: better practices for hiring school principals. The logic model begins with a problem (high-needs schools find it difficult to attract and retain high-quality principals) and ends with the hoped-for outcome of solving that problem: “improved principal competencies → improved schools → improved student achievement,” as RAND puts it. In between are the activities expected to lead to that end, such as the introduction of new techniques for recruiting and hiring effective principals, along with the short-term results they logically point to, such as the development of a larger pool of high-quality school leader candidates.

Helpful as they may be for persuading the feds to fund a worthy idea, logic models may have an even more important purpose: testing the assumptions behind a large and expensive undertaking before it gets under way. The roots of this notion, Pauly says, go back to the 1990s and the community of researchers tasked with evaluating the effects of large, complex human services programs.

As Pauly explains it, researchers were frustrated on two fronts: the programs they had investigated appeared, as a whole, to have had little impact, and the reasons for the lackluster showing were elusive. “It was a big puzzle,” Pauly says. “People were trying innovations, and they were puzzled by not being able to understand where they worked and where they didn’t.”

Into this fray, he says, entered Carol Hirschon Weiss, a Harvard expert in the evaluation of social programs. In an influential 1995 essay*, Weiss argued that any social program rests on “theories of change,” implied or stated ideas about how an effort will work and why. Given that, she urged evaluators to shift from a strict focus on measuring a program’s outcomes to identifying the program operators’ basic ideas and tracing their consequences as the program unfolded.

“The aim is to examine the extent to which program theories hold,” she wrote. “The evaluation should show which of the assumptions underlying the program break down, where they break down, and which of the several theories underlying the program are best supported by the evidence.” She also urged researchers to look at the series of “micro-steps” that compose program implementation and examine the assumptions behind these, too.

Weiss identified a number of reasons evaluators might want to adopt this approach; among other things, she said, confirming or disproving the theories behind social programs could foster better public policy. But Weiss also made strikingly persuasive arguments for why partners in a complex social-change endeavor would want to think long and hard together before launching a program. One was that such reflection could unearth differences in their views about a program’s purpose and rationale. That, in turn, could help forge a new consensus among program partners, to say nothing of refined practices and “greater focus and concentration of program energies,” she wrote.

There could be a lesson in this for the many people involved in efforts to create a larger corps of effective principals, among them school district administrators, university preparation program leaders, and principals themselves, to name just a few. They may give themselves a better chance of achieving beneficial change if they first reach a common understanding of what they seek to accomplish.

In other words, it helps when everyone on the journey is using the same map.

\*The title of Weiss’s essay is “Nothing as Practical as a Good Theory: Exploring Theory-Based Evaluation for Comprehensive Community Initiatives for Children and Families.”